submitted by /u/MLtinkerer


Category: Misc

TF based animation

submitted by /u/BlakeYerian

NVIDIA has partnered with One Convergence to solve the problems associated with efficiently scaling on-premises or bare metal cloud deep learning systems.


Join this webinar to learn how NVIDIA SimNet addresses a wide range of use cases involving coupled forward simulations without any training data, as well as inverse and data assimilation problems.


Simulations are prevalent in science and engineering fields and have been recently advanced by physics-driven AI.


SimNet integrates parameterized constructive solid geometry and STL modules to generate point clouds, and researchers can customize it through APIs to implement new geometry and physics. It also provides advanced network architectures optimized for high-performance GPU computing, and it scales across multi-GPU and multi-node implementations with accelerated linear algebra.

By attending this webinar, you’ll learn about:

- Neural network solver methodology and the SimNet architecture

- Real-world use cases, from challenging forward multi-physics simulations with turbulence and complex 3D geometries to industrial design optimization and inverse problems

- User implementation of two-phase flow in a porous medium in SimNet

- SimNet results and what’s next for the toolkit

This post presents a snapshot of the system status in mid-2020 and highlights some of the work done by the PilotNet group.


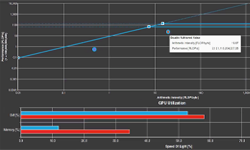

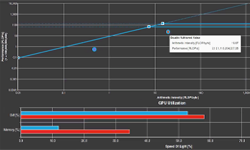


In this three-part series, you discover how to use NVIDIA Nsight Compute for iterative, analysis-driven optimization. Part 1 covers the background and setup needed, part 2 covers beginning the iterative optimization process, and part 3 covers finishing the analysis and optimization process and determining whether you have reached a reasonable stopping point.

In this post, we discuss the various considerations for enabling Tensor Cores in NVIDIA libraries.


We love PC games. The newest titles and the greatest classics. FPS, RPG, grand strategy, squad-based tactics, single-player, multiplayer, MMO: you name it, we love it. There are more than 800 games on GeForce NOW, including 80 of the biggest free-to-play games, streaming straight from the cloud. And thanks to the explosive …

The post New Games, New Features — That’s GFN Thursday appeared first on The Official NVIDIA Blog.

Hey! Sorry if this question does not make 100% sense; my education has not yet reached formal ML classes, but I’ll ask nonetheless.

I want to make a GAN in TensorFlow, but instead of just copying and pasting someone’s code, I want to truly understand its bits and parts.

From what I know about Naive Bayes, it predicts the distribution of our original data. But after each iteration, how can one sample from this distribution? And once you take a sample from it, how do we actually pass it to our discriminator in code?

Thanks everyone 🙂

submitted by /u/20gunasart
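
A rough sketch that may help with the sampling part of the question above. In a GAN the generator usually does not output an explicit distribution that you then sample from. Instead, you draw random noise and push it through the generator, and whatever comes out is the sample. That output is then handed straight to the discriminator as its input. The snippet below shows only this data flow in plain Python; the function names, toy weights, and sizes are all made up for illustration, and there is no training loop. In TensorFlow the noise would typically come from `tf.random.normal` and the two functions would be models, but the shape of the idea is the same.

```python
import math
import random

def sample_latent(batch_size, latent_dim, rng):
    """Draw a batch of noise vectors z, each entry from a standard normal."""
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
            for _ in range(batch_size)]

def generator(z_batch, weights, bias):
    """Toy generator (hypothetical): a single linear map from z to one scalar."""
    return [sum(w * z for w, z in zip(weights, z_vec)) + bias
            for z_vec in z_batch]

def discriminator(x_batch, weight, bias):
    """Toy discriminator (hypothetical): logistic score, read as P(real)."""
    return [1.0 / (1.0 + math.exp(-(weight * x + bias))) for x in x_batch]

rng = random.Random(0)

# Step 1: "sampling from the generator's distribution" means sampling noise...
z = sample_latent(batch_size=4, latent_dim=3, rng=rng)

# Step 2: ...and transforming it. The generator output IS the fake sample.
fake = generator(z, weights=[0.5, -0.2, 0.1], bias=1.0)

# Step 3: the fake batch is passed to the discriminator like any real batch.
p_real = discriminator(fake, weight=0.8, bias=-0.3)

print(len(fake), all(0.0 < p < 1.0 for p in p_real))
```

During training, the discriminator would also see a batch of real data, and the two networks would be updated from their respective losses; that part is left out here on purpose.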

submitted by /u/ep_es_